Restoring Voices and Identity with Neuroengineering

Electrical and computer engineering graduate student part of project to return speech to those who have lost it

Every year, nearly 1 million people worldwide are diagnosed with head and neck cancer. Many lose their ability to speak intelligibly due to surgical removal of — or radiation damage to — the larynx, mouth and tongue.

These people can learn to talk again using devices that emit artificial sounds, which they can shape into words, but their new voices are often weak, mechanical or distressingly unfamiliar.

Electrical and computer engineering graduate student Harsha Gowda is working to restore natural speech in such individuals in a project with Professor Lee Miller, part of the Department of Neurobiology, Physiology and Behavior at the University of California, Davis.

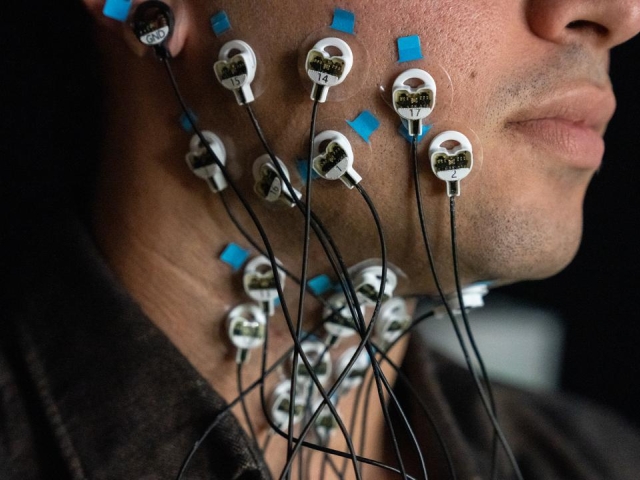

The team is training a model capable of translating electromyographic, or EMG, signals into personalized speech at the phonemic level — the way someone says “a” in apple, for example. EMG signals are generated by muscle contractions, like those around the jaw and neck, and are important for creating a replication of one’s voice due to their ability to reveal the relationship between speech and the human body.

This approach is unique from traditional speech processing, which uses audio as the only input.

"In speech processing, convolutional neural networks work well because audio can be thought of as a signal on a one-dimensional grid, where patterns evolve smoothly over time,” Gowda said. “However, this audio-only approach makes it difficult to extract meaningful representations of someone’s voice, as the method struggles to capture relationships between speech and different muscle activations, particularly when compared to the more complex information gleaned from EMG signals."

While EMG signals provide richer data from which to model personalized speech patterns, they are difficult to work with. These biological signals produce mountains of data per second, which computers need to be able to process quickly.

The team developed a workaround by using only tiny bits of the incoming signals while ignoring everything else. Rather than tracing the chaotic ups and downs of each electrode measuring EMG signals on a person’s face, Gowda employed a strategy that measures signal relationships between various pairs of electrodes.

In addition, Miller and Gowda have designed neural networks for their model to analyze EMG signals through their covariance structure, leveraging a mathematical framework based on symmetric positive definite matrices. This approach allows their model to better capture the complex interdependencies between muscle activations and voice.

"As we move forward, we plan to integrate video modalities into our paradigm, incorporating lip movement analysis captured via smartphone cameras. This multimodal approach will enhance the system's robustness in translating silently voiced articulations into audible speech," Gowda said.