Engineers, Neurosurgeons Help Restore Autonomy for People with Paralysis

New research shows brain-computer interfaces for speech can also support cursor control, paving the way for more complex, feature-rich technology

In a world first, a team of engineers, neuroscientists and neurosurgeons at the University of California, Davis, and UC Davis Health has demonstrated that brain-computer interfaces (BCIs) for translating brain signals into speech can also enable control of a computer cursor.

The team’s multimodal BCI restores a user’s ability to speak and to move a cursor or click on objects on a computer screen without assistance. Their findings, published in the Journal of Neural Engineering, pave the way for feature-rich, multimodal BCIs that restore functions to people with paralysis, along with a level of autonomy previously thought impossible.

“This is the first demonstration of a BCI that supports both speech and cursor-based computer control,” said Tyler Singer-Clark, a biomedical engineering Ph.D. student and first author on the paper. “Future steps in multimodal BCIs could include gesture decoding for all sorts of different things, enriching the types of interactions someone with paralysis can have with their environment beyond speech.”

Singer-Clark is a member of the UC Davis Neuroprosthetics Lab, which is co-directed by neuroscientist Sergey Stavisky and neurosurgeon David Brandman. Under Stavisky and Brandman, the lab previously created the most accurate speech BCI reported to date, an innovation that Singer-Clark’s project builds on.

Advancing the Standard

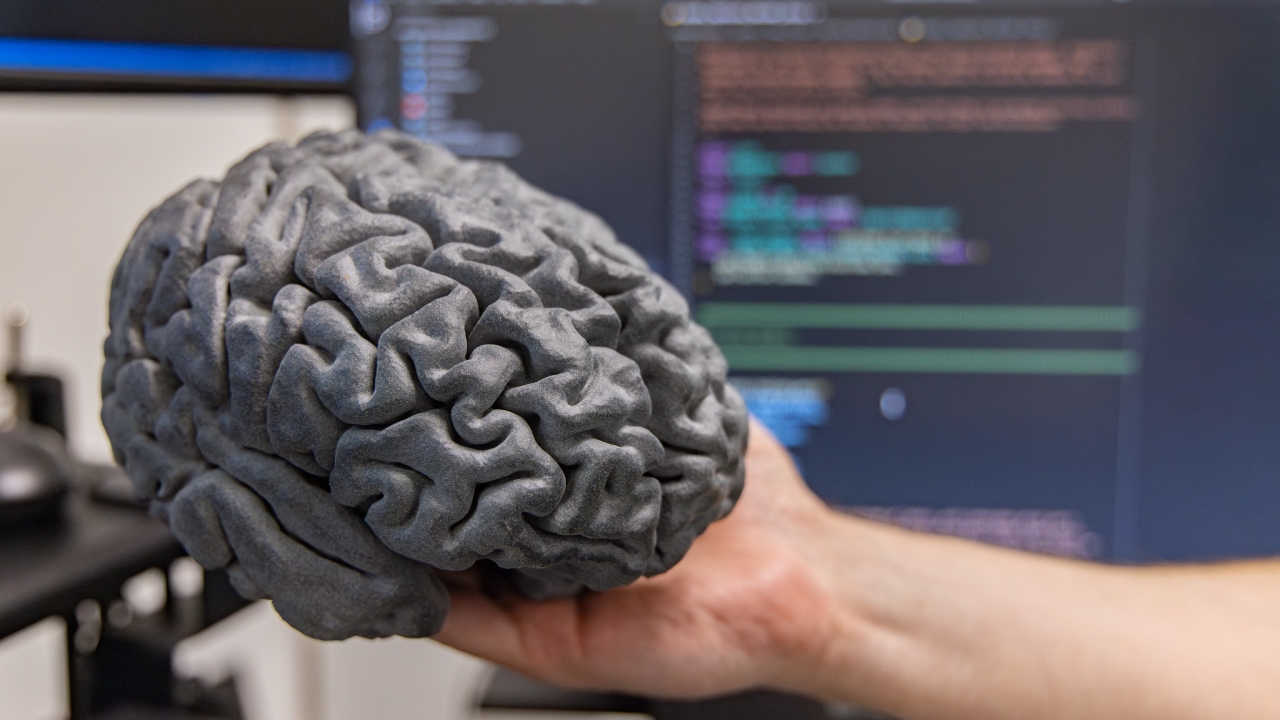

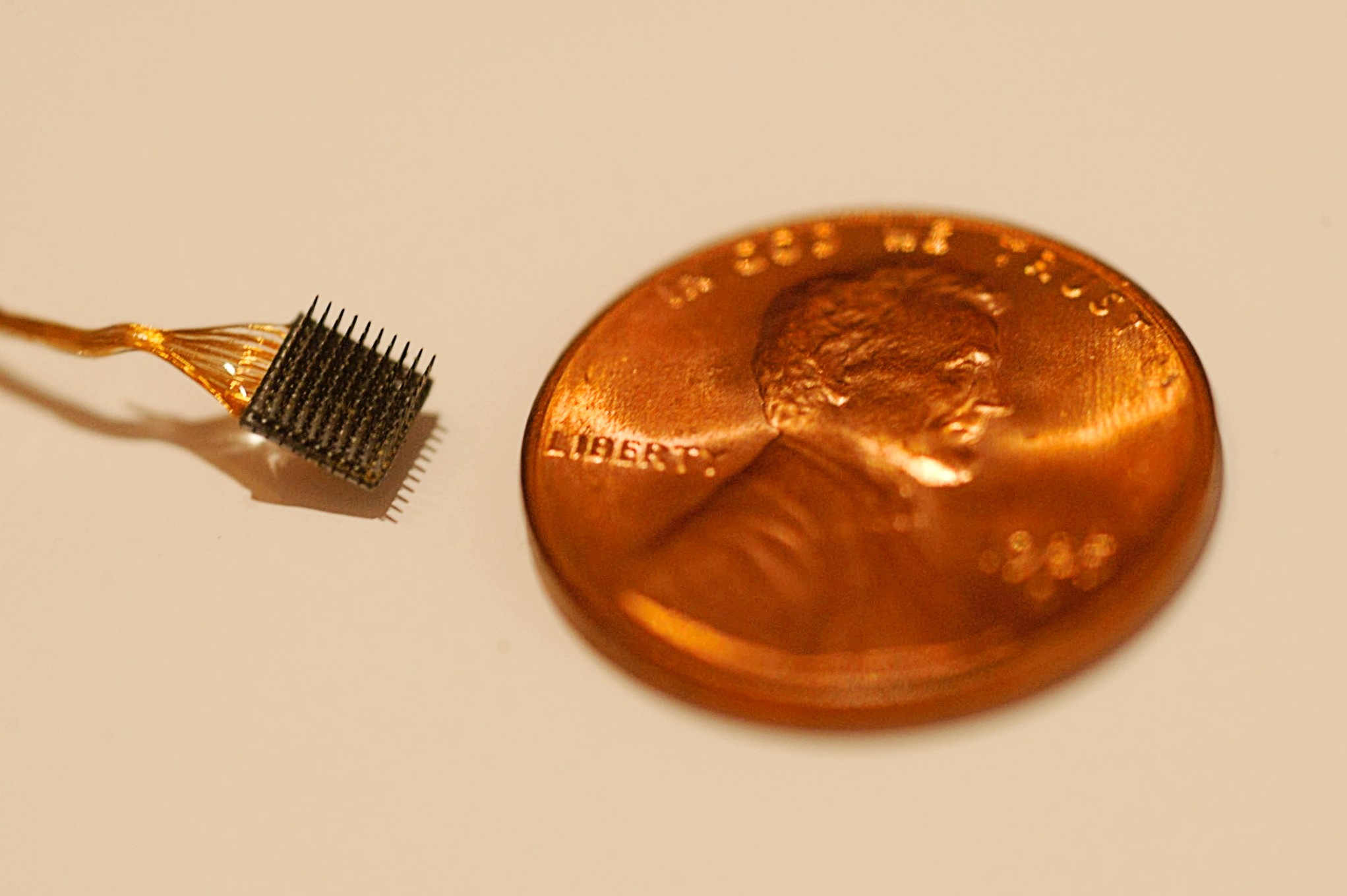

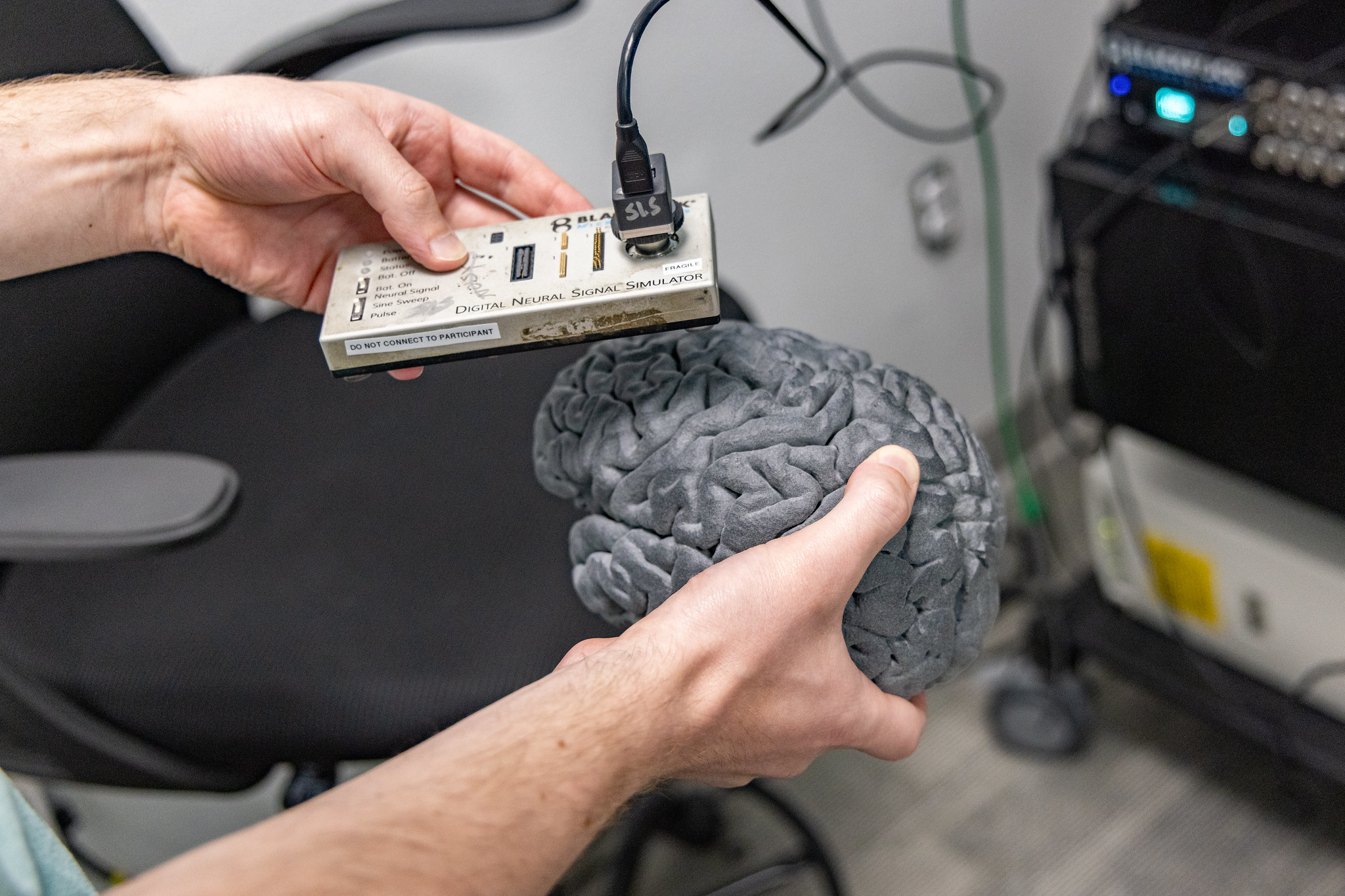

The lab’s BCI uses a recording device comprising four electrodes, each with 64 recording sites, developed by Blackrock Neurotech. The device only records brain signals; the team chooses where to implant it and builds the software that turns those signals into functionality.

Their speech BCI, implanted in the speech motor cortex, interprets electrical activity generated by thinking and translates it into recognizable words displayed on a computer.

The team found something interesting about their speech BCI: The part of the brain it was analyzing for speech showed signs that it could support control of a cursor, a motor ability typically associated with a different area of the brain.

Science has historically understood neural functions as siloed in certain regions of the brain. This mixing of abilities across grey matter is not an entirely new observation, as previous research has shown that the neurons responsible for hand movement can support speech in a limited capacity. But such blurring and blending had never been demonstrated in the speech motor cortex, an emerging focus of BCI research because of the transformative effects it can have on individuals with paralysis.

Engineering Digital Architectures

Following the team’s observation, Singer-Clark began work on cursor control software for the speech motor cortex BCI.

A big ask for a biomedical engineer, but Singer-Clark was prepared for this moment. He received his undergraduate degree in computer science from MIT and worked as a software engineer for five years before transitioning to BCI research. In fact, it was a blog post on how BCIs can give people with paralysis the ability to control a cursor or move a robotic arm that inspired his career shift and ultimately brought him to UC Davis.

In 2023, he bolstered his neural data analysis and engineering skills as a fellow in the NeuralStorm program, a premier neuroengineering training program offered by the UC Davis Center for Neuroengineering and Medicine.

Still, coding for a BCI is complicated. It requires thinking through a decoding architecture that is able to interpret an individual’s brain activity and turn it into a useful and understandable action.

While no one had ever coded such a system for cursor control driven by the speech motor cortex, Singer-Clark looked to decades of prior research on cursor control driven by more traditional movement-related brain areas as a starting point. He was also able to base his architecture on the existing code used in the lab’s speech BCI.

“We didn't have to reinvent the pre-processing of the neural data,” he said. “For cursor control, it’s actually the same pre-processing steps the speech BCI uses to get the neural features that are going to be useful for decoding the intention of the participant.”
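The idea of one shared pre-processing stage feeding both decoders can be pictured with a minimal sketch. Everything named here is an assumption made for illustration: the function names, the use of per-channel z-scoring as the "pre-processing," and the toy decoders are hypothetical and are not taken from the lab's code or from BRAND.

```python
from statistics import mean, pstdev


def extract_features(binned_counts):
    """Hypothetical shared pre-processing: z-score each channel across time bins.

    binned_counts is a list of time bins, each a list with one value per channel.
    """
    n_channels = len(binned_counts[0])
    columns = [[row[c] for row in binned_counts] for c in range(n_channels)]
    # Fall back to a spread of 1.0 for silent/constant channels to avoid dividing by zero.
    stats = [(mean(col), pstdev(col) or 1.0) for col in columns]
    return [
        [(row[c] - stats[c][0]) / stats[c][1] for c in range(n_channels)]
        for row in binned_counts
    ]


def run_multimodal(binned_counts, decoders):
    """Compute the features once, then hand the same features to every decoder."""
    features = extract_features(binned_counts)
    return {name: decode(features) for name, decode in decoders.items()}


# Toy stand-ins for the two decoders (illustrative only).
toy_decoders = {
    "speech": lambda feats: f"{len(feats)} bins sent to the speech decoder",
    "cursor": lambda feats: f"{len(feats)} bins sent to the cursor decoder",
}
outputs = run_multimodal([[0, 10], [2, 10], [4, 10]], toy_decoders)
```

The design point is only that the feature extraction runs once per batch of neural data and both modes read from its result, which is the reuse Singer-Clark describes.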

With this body of research and the BCI’s foundational code, Singer-Clark engineered a system for enabling cursor control in BRAND — a fast, modular and open-source software architecture for BCI functionality developed by the UC Davis Neuroprosthetics Lab and collaborators across the country.

Mapping Functionality

With the cursor control software complete, Singer-Clark’s last step was to individualize the decoding architecture.

He worked with the same man who had participated in the original speech BCI research as part of the BrainGate2 clinical trial. The man is unable to move due to amyotrophic lateral sclerosis (also known as ALS or Lou Gehrig’s disease), a disease of the nervous system that leads to gradual loss of movement.

Singer-Clark had the man watch a cursor moving around a screen and selecting targets. As the man thought about and observed the movement of the cursor, Singer-Clark watched the man’s neurons firing. He then mapped these activity patterns, such as which neurons activate when the man wants to move left, onto the cursor control software.
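A rough way to picture this calibration step is a simple correlation-based linear map from neural activity to intended cursor velocity. The sketch below is an illustration under stated assumptions, not the decoder used in the study: the function names, the two-channel toy data and the averaging rule are all hypothetical.

```python
def fit_tuning(features, intended_velocities):
    """Estimate a (vx, vy) tuning vector per channel from calibration data.

    Each channel's tuning is the average of (its activity * the intended velocity),
    so a channel that is more active during leftward intent gets a negative vx weight.
    """
    n_samples = len(features)
    n_channels = len(features[0])
    tuning = []
    for c in range(n_channels):
        tx = sum(f[c] * v[0] for f, v in zip(features, intended_velocities)) / n_samples
        ty = sum(f[c] * v[1] for f, v in zip(features, intended_velocities)) / n_samples
        tuning.append((tx, ty))
    return tuning


def decode_velocity(feature_row, tuning):
    """Decode one time bin: sum each channel's activity times its tuning vector."""
    vx = sum(x * t[0] for x, t in zip(feature_row, tuning))
    vy = sum(x * t[1] for x, t in zip(feature_row, tuning))
    return vx, vy


# Toy calibration set (made up): channel 0 is more active when the intent is "left",
# channel 1 when the intent is "right". Activity values are already mean-centered.
calibration_features = [[1.0, -1.0], [-1.0, 1.0]]
calibration_velocities = [(-1.0, 0.0), (1.0, 0.0)]  # left, then right

tuning = fit_tuning(calibration_features, calibration_velocities)
left_like = decode_velocity([1.0, -1.0], tuning)    # negative vx: cursor moves left
right_like = decode_velocity([-1.0, 1.0], tuning)   # positive vx: cursor moves right
```

In this toy version, new activity that resembles the "left" calibration pattern decodes to a leftward velocity. A real system would work with many more channels and noisy data, and would typically use a more robust fitting method.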

Once the software was enabled for the man’s implanted BCI, it took him less than 40 seconds to adapt to the cursor control functionality. By thinking about moving the cursor, he was able to move it on his computer screen. He was also able to click on apps and open links.

Singer-Clark clarified, however, that the BCI is not translating signals of abstract thoughts into movement.

“What information do those brain signals contain? They contain all sorts of things. A lot of people ask, ‘Oh, are they moving the cursor by thinking about the word ‘left’ or the word ‘right’?’ It's not that.”

For the man using the BCI, it’s something that feels second nature and almost unconscious, like the brain’s ability to automate breathing. Intuition, he said.

“That's his word, intuition. I'll say, ‘What motor imagery are you using?’ And he says, ‘Intuition.’”

“Singer-Clark’s work is incredibly important for the field,” said Brandman, one of the co-directors of the UC Davis Neuroprosthetics Lab. “He was the first to show that the speech part of the brain also has enough neural information about arm movements for us to incorporate into a brain-computer interface. His work has not only empowered our BrainGate2 participant to use a computer cursor with his thoughts but has also led the way for multiple companies in this space to design their clinical trials.”

An Autonomous Future

For Singer-Clark, the impact of this project is twofold.

On the one hand, this project is proof that complex, multimodal BCIs are feasible.

“The whole point of this study,” he said, “is that we're using the same set of electrodes for both speech decoding and cursor decoding. It hammers home a growing viewpoint that different body parts and their movements are represented in multiple areas of the motor cortex, as opposed to being all siloed in their own areas. There will be cool studies to come about BCIs capable of multiple modes, like our cursor control and speech BCI.”

On the other hand, there’s a very personal and powerful consequence of this research. There’s a man who has regained autonomy.

“There's a man with ALS who has a speech BCI and a cursor BCI who can control his computer independently without someone else helping him for hours and hours every day. It's like this great event, and we might not have tried if we didn't have that prior research encouraging us to do that.”

That’s what BCIs — and the UC Davis engineers, neuroscientists and neurosurgeons advancing the technology — are pushing for: A future where people with paralysis can gain a level of autonomy previously thought impossible.