Good AI vs. Bad AI

Online life has become increasingly mediated by artificial intelligence (AI).

Nearly 70% of all videos watched on YouTube are recommended by its AI algorithm, and that share is even higher on social media services like Instagram and TikTok. Though these AI algorithms can help users find content that interests them, they raise serious privacy concerns, and there is growing evidence that some people are being radicalized by the recommended content they consume online.

If this is what “bad AI” looks like, an interdisciplinary group of researchers at UC Davis is trying to develop “good AI” to push back against it and give users more control over their privacy and the content recommended to them. Developing a holistic, customizable good AI is a difficult technical and social challenge, but one of utmost importance.

“While working on the positive impacts of AI, I realized how it could also negatively impact societal and online usage patterns,” said Prasant Mohapatra, vice chancellor for research at UC Davis and Distinguished Professor of computer science (CS). “Online sources are having a tremendous impact on our society and biases in the online algorithms will unconsciously create unfairness and influence the beliefs of people, as well as propagate false information and instigate divisions among various groups.”

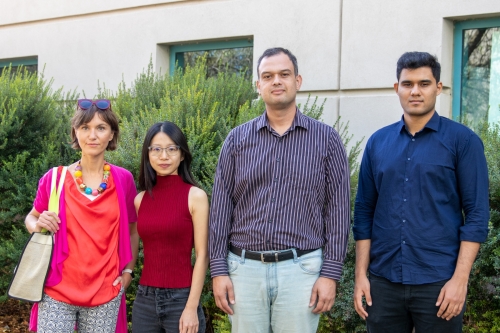

After receiving funding from the Robert N. Noyce Trust for a project on AI, cybersecurity, privacy and ethics for the public good, Mohapatra and his colleagues decided to tackle the challenge. The team includes CS Associate Professor Zubair Shafiq, CS Professors Ian Davidson and Xin Liu, Communication Professor Magdalena Wojcieszak and graduate students from each lab.

“This is not some hypothetical problem that we’re dealing with. This is a real, real problem in our society and we need to fix it,” said Shafiq. “Every possible angle from which we can approach this problem and make a dent in it, short term or long term, is absolutely important.”

More Transparent Algorithms

Major websites like YouTube, TikTok and Google all run their own AI algorithms, and some run several at the same time. These algorithms can also interact with each other. If someone searches for new cars on Google, YouTube’s algorithm might pick up on this and show them car-related content the next time they open their feed. That recommended content on YouTube could, in turn, influence recommendations on another website.

Most websites track users’ browsing activity to train their AI recommendation algorithms or sell the information to data brokers, who then combine it with publicly available offline data to make inferences about a person’s interests. This helps advertisers place effective, targeted ads, but people don’t have control over what data brokers learn about them or which ads they see as a result.

Good AI’s job is to understand these complexities and send out false signals that keep bad AI from zeroing in on users’ interests, either to prevent harmful recommendations or to keep information private.

“One way to define privacy is the accuracy of certain attributes that someone infers about us—so you think you know something about me, but 80% of that is wrong,” said Shafiq. “Therefore, our goal is to maximize the inaccuracy of the inferences.”
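Shafiq’s definition can be made concrete with a toy model. The sketch below is a hypothetical illustration, not the team’s method: a tracker guesses that a user’s two most-visited page categories are their interests, and a good-AI step mixes in visits to unrelated decoy categories. The function names (`infer_interests`, `inaccuracy`, `obfuscate`), the categories and the cycling decoy strategy are all assumptions made for illustration.

```python
from collections import Counter

def infer_interests(history: list[str], k: int = 2) -> set[str]:
    """Hypothetical tracker: guess that the k most-visited categories are the user's interests."""
    return {category for category, _ in Counter(history).most_common(k)}

def inaccuracy(inferred: set[str], true_interests: set[str]) -> float:
    """Share of inferred interests that are wrong; the obfuscator wants this close to 1.0."""
    if not inferred:
        return 0.0  # no guesses means no wrong guesses
    return len(inferred - true_interests) / len(inferred)

def obfuscate(history: list[str], decoys: list[str], n_fake: int) -> list[str]:
    """Hypothetical good-AI step: append n_fake visits that cycle through unrelated decoys."""
    fake_visits = [decoys[i % len(decoys)] for i in range(n_fake)]
    return history + fake_visits

if __name__ == "__main__":
    true_interests = {"cars", "cooking"}
    history = ["cars"] * 5 + ["cooking"] * 4 + ["news"]
    print(inaccuracy(infer_interests(history), true_interests))  # 0.0
    noisy = obfuscate(history, decoys=["gardening", "chess"], n_fake=12)
    print(inaccuracy(infer_interests(noisy), true_interests))  # 1.0
```

In this toy case, 12 decoy visits push the tracker’s inaccuracy from 0.0 to 1.0. The same noise would also degrade recommendations a user actually wants, which is why the team is working to make good AI customizable.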

The team’s first prototype does this for YouTube recommendations. After their recent study found that YouTube’s algorithm can recommend increasingly extreme and biased political content, the team built a tool that monitors the bias of recommended videos on a user’s homepage and injects politically neutral video recommendations to reduce the overall bias of the feed.
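The article does not say how the prototype scores bias, so the sketch below rests on stated assumptions: each recommended video carries a hypothetical slant score from -1.0 (far left) to +1.0 (far right), with 0.0 meaning neutral; the feed’s bias is the mean score; and the tool keeps adding neutral videos until the absolute bias is at or below a chosen threshold. The names `feed_bias`, `rebalance` and `NEUTRAL` are illustrative, not taken from the team’s code.

```python
from statistics import fmean

NEUTRAL = 0.0  # hypothetical slant score for a politically neutral video

def feed_bias(slants: list[float]) -> float:
    """Mean slant of a feed: 0.0 is balanced; -1.0 or +1.0 is maximally one-sided."""
    return fmean(slants) if slants else 0.0

def rebalance(slants: list[float], threshold: float) -> list[float]:
    """Return a copy of the feed with neutral videos appended until |bias| <= threshold."""
    if threshold <= 0:
        # With a zero threshold and a nonzero total slant, the loop would never end.
        raise ValueError("threshold must be positive")
    feed = list(slants)
    while abs(feed_bias(feed)) > threshold:
        feed.append(NEUTRAL)
    return feed

if __name__ == "__main__":
    homepage = [0.9, 0.6, 0.9]  # three strongly right-leaning recommendations (hypothetical scores)
    balanced = rebalance(homepage, threshold=0.25)
    print(len(balanced) - len(homepage))  # 7
    print(round(feed_bias(balanced), 2))  # 0.24
```

Because the bias here is a simple average, left- and right-leaning videos cancel out, so a feed with one extreme video on each side would count as balanced; a real tool might track each direction separately.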

As they continue to develop that system, the researchers are also studying the AI algorithms behind TikTok, Google and Facebook and plan to expand to more sites in the future. Not only will these studies help the team build better good AI down the road, but they will also shed light on how these websites use people’s data.

“These systems are opaque to the end user, who doesn’t really know what’s happening and what data they’re feeding off,” said Computer Science Ph.D. student Muhammad Haroon, who led the YouTube study. “One of the larger themes of this collaboration is to make these systems more transparent and give users a bit more control over the algorithm and what they’re being recommended.”

User-First Design

While an average user could manipulate algorithms the same way good AI does, doing so requires more time, effort and education than most people have. The more easily and seamlessly good AI can run, therefore, the more likely it is to be adopted.

“If the burden of using the tool is significant, the average user is just not going to use it,” said Shafiq. “If the good AI intervention can work automatically and [be] baked into the devices, that is going to take the burden off the average user.”

The team also acknowledges that some people may want algorithms to recommend relevant content to them, so they are working to make good AI customizable. Shafiq’s vision is to eventually let people pick and choose which of their interests AI algorithms are allowed to learn about.

“We believe that every user has the right to control the algorithm that they’re interacting with,” he said. “This is quite challenging but technically achievable and something that we are actively working towards.”

Curbing the Wild West

Shafiq believes that AI will ultimately be subject to government regulation, but that is a slow process. Content creators rely on the algorithms to get views, and targeted advertising is a $100 billion business that supports platforms, advertisers and data brokers alike, so companies have little incentive to fix their AI algorithms.

However, with consequences like the growing antivaccination movement and the January 6th insurrection, Shafiq feels the team can’t wait for these processes to play out and hopes good AI is the short-term solution the world needs.

“Eventually, I think it is a public policy issue and we will, as a society, realize that that’s how we need to approach this, but in the meanwhile, we cannot let this be a wild west,” he said. “We’re working on this somewhat controversial idea not just because we want to do it, but because we believe we have a social responsibility to tackle this problem.”

This story was featured in the Fall 2022 issue of Engineering Progress.