Visualizing and Communicating Ambiguity for High-Stakes Collaboration

Researchers investigate how AI and machine learning can help in team-based decision-making scenarios like wildfire management

Consider this: A team of firefighters must decide how to treat a wildfire. They review various data sets, like satellite imagery, wildfire expert research and social media posts. Based on these data, the team makes the best decision it can, but one element complicates the scenario: ambiguity.

Ambiguity occurs when people arrive at multiple interpretations of the same data and analysis results. It can arise on the visualization side: a visual representation like a graph or chart may leave some data out and, if not annotated correctly, lead to misinterpretations. It can also arise on the user side: colors, icons and shapes can have different meanings for different people based on their background and experiences. Adding artificial intelligence and machine learning, whose models could be biased or inaccurate, can complicate matters even further.

To learn more about why and how these ambiguities happen and find solutions to help alleviate them for high-stakes, team-based decision-making, Distinguished Professor Kwan-Liu Ma and Assistant Professor Dongyu Liu, both of computer science at the University of California, Davis, are collaborating on a new project funded by the National Science Foundation, or NSF.

Generational Collaboration

The project stems from Ma’s previous research on visualization and uncertainty. In that work, also funded by NSF, Ma’s team identified and quantified uncertainty stemming from incomplete data, data with errors, or visual transformations. The team then designed different ways to convey that uncertainty in visualizations of the data.

Ma approached Liu, who joined UC Davis in 2023 and works in visualization and human-AI interaction for data-based decision-making, to collaborate on this extension of the project, which focuses on ambiguity in team-based decision-making with the aim of improving collaborative efforts.

“When you have a group of people working together, the communication between them is very important,” said Ma. “Then, when they try to communicate with each other about complex data or findings, oftentimes, they use some sort of visual form for the information. That can lead to ambiguity, multiple interpretations and uncertainty.”

Setting Goals

Ma and Liu have set three goals for this research. First, they will develop techniques to identify the sources of ambiguity in collaborative tasks that lead to high-stakes decision-making in emergency management domains like healthcare and wildfires. Some of those sources of ambiguity, as previously mentioned, could be inherent to the data or the visualizations, but they could also stem from interpretations of that data by AI or humans.

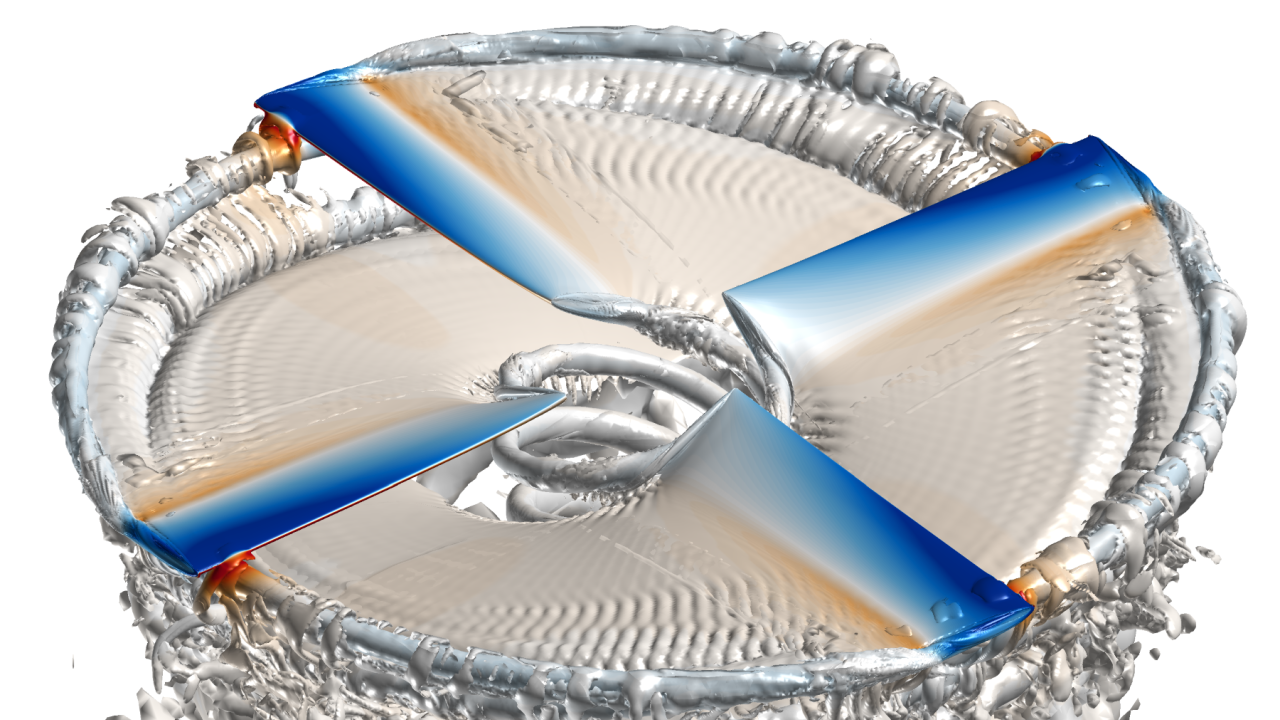

Second, the team will design new domain-relevant visual metaphors to represent ambiguity for more effective communication. A domain-specific metaphor might be using smoke in a visualization of fire intensity data or using water droplets and water bottles to convey information about the water system and water equity in California (one of Ma’s other projects).

“Whenever possible, we try to make the visualizations more interesting looking and, at the same time, meaningful to the viewer,” said Ma.

Lastly, Ma and Liu will develop techniques powered by large language models to track, interpret and visually articulate the downstream effects of ambiguity on collaborative decision-making processes. One area Liu says they want to explore is multimodal foundation models.

“Let’s say you upload an image,” said Liu. “A multimodal model is able to generate and describe this image. Applied to visualization, you could read a chart using AI and gain some insight. We can probably take advantage of this capability to calibrate different insights coming from different people when they are interpreting the results.”

One place where Liu sees AI coming in handy is within the team-based collaboration itself. In one of his projects, Liu uses machine learning to try to predict anomalous events by analyzing tens of thousands of signals from satellites.

The system, says Liu, is so large that the satellite engineers are not able to catch all types of errors. The predictive model aims to pinpoint which components of this large system are behaving abnormally, and when, by narrowing down the data and components to the suspicious ones.
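To make the idea of flagging "which component, and when" concrete, here is a minimal Python sketch. It is not Liu's model, which the article does not describe; it swaps in a simple robust z-score rule (median and median absolute deviation) as a stand-in. The function name `flag_anomalies`, the component names, the readings and the 3.5 threshold are all assumptions made up for illustration.

```python
from statistics import median


def flag_anomalies(signals, threshold=3.5):
    """Return (component, time_index) pairs whose readings look abnormal.

    `signals` maps a hypothetical component name to its list of readings.
    """
    flagged = []
    for component, readings in signals.items():
        center = median(readings)
        # Median absolute deviation: a spread estimate that a few
        # extreme readings barely move.
        mad = median(abs(x - center) for x in readings)
        if mad == 0:
            # Most readings are identical, so the ratio below is undefined;
            # this sketch simply skips such signals.
            continue
        for t, x in enumerate(readings):
            # 0.6745 rescales the MAD so the score is comparable to a
            # standard z-score for normally distributed data.
            robust_z = 0.6745 * abs(x - center) / mad
            if robust_z > threshold:
                flagged.append((component, t))
    return flagged


# Made-up telemetry: one temperature spike at time index 4.
signals = {
    "battery_temp": [20.1, 20.3, 19.9, 20.2, 35.0, 20.0, 20.1],
    "bus_voltage": [28.0, 28.1, 27.9, 28.0, 28.1, 27.9, 28.0],
}
print(flag_anomalies(signals))
```

A rule this simple only catches single readings far from a component's typical value. A real system monitoring tens of thousands of signals would likely need a learned model, but the output has the same shape: a short list of components and times for experts to discuss.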

The team works with an interactive interface built on the model’s results, where members can discuss and share insights to determine what the error could be and what actions to take next.

“We see a lot of disagreement between all of the [satellite] experts,” said Liu. “We hope to leverage large language models to help us summarize where the difference is coming from and maybe generate some reports for us.”

AI as Helper or Hindrance? Both?

While Ma and Liu plan to study AI and machine learning as solutions to potential ambiguities, they will also research them as sources of ambiguity. Trusting that a model is accurate and was trained in a way that avoids bias is difficult under normal circumstances, and people in high-stakes situations may trust it even less.

The data, then, needs to be verified. Ma and Liu will also try to understand how the AI models arrive at their results, determine how trustworthy AI-based information is and convey that to the user visually, because at the end of the day, it is still people who make these decisions.

“While AI/ML models are advancing very fast,” said Liu, “they are not able to — at least at the current moment — completely replace humans for decision-making, especially in high-stakes scenarios.”